Simplifying key machine learning terms: demystifying the jargon

For those who want a deeper understanding of the latest machine learning developments, or of how tools like ChatGPT work

ChatGPT, DALL-E, and GPT-4 took the internet by storm. Even though AI research has been around for 80 years, the latest developments have captured the curiosity of millions inside and outside the tech industry, finally making AI mainstream #basic.

The hype also kicked off an AI arms race inside the industry. Large companies are competing to release the best models. Startups are racing to build the best products on top of these models: one in every five companies in Y-Combinator’s current cohort is building in the generative AI space #maythebestwin.

If you want a deeper understanding of the machine learning developments in your newsfeed, or of how these models work, this post is for you. It aims to build intuition around key machine learning terms and demystify some of the inner workings of these developments. With this foundation in place, I tackle how language models are trained and how the transformer architecture works in separate posts.

Section I: Computers Speak Numbers — Explaining Embeddings

Ever wondered how computers understand concepts that humans perceive with our senses, like language and images? For example, users send text to ChatGPT and it generates a text response. How does ChatGPT understand what the user is saying? Embeddings. Computers don’t understand English; they understand numbers, vectors, and matrices. Embeddings are a way to map concepts to meaningful numbers. “Meaningful” in that similar concepts are physically close to each other on a graph.

For example, if you want to build a movie-recommendation model, you would need a way to embed movies. A potential embedding could consider where movies lie on a graph defined by kid-friendliness and humor:

Voila, the movie The Hangover can now be meaningfully represented by numbers. Based on this representation, if you’ve watched The Hangover, the recommendation system is more likely to recommend The Incredibles to you than Soul. It is a pretty bad recommendation, but hey, it’s a start. To represent a movie properly, many more traits would need to be captured, resulting in a longer embedding vector.
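To make the idea of "close on a graph" concrete, here is a minimal Python sketch. The coordinates are made up for illustration (they are not taken from the chart in this post), and `distance` and `closest_movie` are hypothetical helper names.

```python
import math

# Hypothetical 2-D embeddings: (kid_friendliness, humor), each on a 0-10 scale.
# These coordinates are invented for illustration only.
movies = {
    "The Hangover": (1.0, 9.0),
    "The Incredibles": (8.0, 7.0),
    "Soul": (9.0, 4.0),
}

def distance(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def closest_movie(title):
    """Return the other movie whose embedding is nearest to `title`."""
    others = (name for name in movies if name != title)
    return min(others, key=lambda name: distance(movies[title], movies[name]))

print(closest_movie("The Hangover"))
```

With these invented numbers, The Incredibles sits nearer to The Hangover than Soul does, which is why it would be recommended first. Adding more traits just means adding more numbers to each tuple.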

In GloVe, a publicly available set of pre-trained embeddings for English words (one version is trained on Wikipedia text), the embedding for the word “king” is a vector of 50 numbers in the smallest version - damn!

Images require embeddings as well. One very basic way to embed images is to represent each pixel by its color. Now an image is just a matrix of numbers, like so:
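As a rough sketch of this idea in Python, a tiny image can be written out as nested lists of color values. The 2x2 image and the `flatten` helper below are assumptions made up for illustration.

```python
# A tiny 2x2 RGB image as a nested list: rows -> pixels -> (red, green, blue).
# Each channel is an integer from 0 to 255. The pixel values are made up.
image = [
    [(255, 0, 0), (0, 255, 0)],      # red pixel, green pixel
    [(0, 0, 255), (255, 255, 255)],  # blue pixel, white pixel
]

def flatten(img):
    """Turn the image into one flat list of numbers, row by row."""
    return [channel for row in img for pixel in row for channel in pixel]

vector = flatten(image)
print(len(vector))  # 2 rows * 2 columns * 3 channels
```

A real photo works the same way, only with millions of pixels instead of four.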

Determining how to embed a model’s input is part of the training process. This takes us to our next concept: what is a model and what does it mean to train it? Models are fundamental to machine learning.

Section II: The Basics of Models — Relating Models, Training, Testing, Parameters, and Tokens

A machine learning model is a computer program that finds patterns or makes decisions on data it hasn’t seen before. For example, a model could be built to predict how likely a startup is to IPO. A computer-vision model could be built to detect dogs in images. A model can be mathematically represented as something that takes an input and spits out an output. For example, the equation y=mx is a model.

A model must be trained (i.e., taught) to return the right output. Once the model has been trained, it must be tested on data it has never seen before to see how accurate it is. If the accuracy is high enough, the model is ready to be used. If not, the model must be retrained.
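The train-then-test loop can be sketched in a few lines of Python. Everything here is an assumption for illustration: the data is invented, and the "model" is just a single cutoff value rather than anything a real system would use.

```python
# Hypothetical labelled data: (hours_studied, passed_exam). Invented for illustration.
train = [(1, False), (2, False), (3, False), (6, True), (7, True), (8, True)]
test = [(2.5, False), (5, True), (9, True), (4, False)]

def accuracy(threshold, data):
    """Fraction of examples where 'x >= threshold' matches the true label."""
    correct = sum((x >= threshold) == label for x, label in data)
    return correct / len(data)

def train_threshold(data):
    """'Training': try each observed x as the cutoff and keep the most accurate one."""
    candidates = [x for x, _ in data]
    return max(candidates, key=lambda t: accuracy(t, data))

threshold = train_threshold(train)
print(threshold, accuracy(threshold, train), accuracy(threshold, test))
```

In this toy setup the model looks perfect on the data it was trained on and somewhat worse on the held-out data, which is exactly why testing on unseen data matters.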

What is happening when a model is being trained? At its core, training involves finding parameter values (aka weights) that result in the highest accuracy on unseen data. Parameters can be understood through this simple example: y=mx is a model with one parameter (namely, m). If you wanted to model how much people pay for ice cream and you had the following data, then you would set the parameter to 3 during the training process.

Now that the model is trained by setting the parameter to 3, we can make a good guess about the amount paid in a group of 100 even though we have no data for it: y = 3 × 100 = 300.
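Here is a minimal sketch of what "setting the parameter during training" could look like in Python. The data points are hypothetical stand-ins chosen to lie on y = 3x, and `fit_slope` uses ordinary least squares for a line through the origin, which is one common way to pick m, not necessarily the only one.

```python
# Hypothetical stand-in data: (people in group, amount paid).
data = [(1, 3.0), (2, 6.0), (5, 15.0), (10, 30.0)]

def fit_slope(points):
    """Least-squares slope for y = m*x (a line through the origin)."""
    numerator = sum(x * y for x, y in points)
    denominator = sum(x * x for x, _ in points)
    return numerator / denominator

m = fit_slope(data)  # "training" the one-parameter model

def predict(x):
    """Use the trained parameter to guess y for an x we have no data for."""
    return m * x

print(m, predict(100))
```

Training recovers m = 3 from the data, and the trained model then guesses 300 for a group of 100.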

When models are “large”, it means that they have a lot of parameters. It doesn’t necessarily mean that they are trained on a lot of data, though the two are correlated. Further, a model’s size determines how much space (KB, MB, etc.) it takes up. Contrary to common belief, increasing a model’s size does not always lead to better results. Instead, it might cause overfitting, where the model “memorizes” the training data as opposed to learning something general. Hence, finding the Goldilocks number for a model’s size is an art.

While talking about inputs, it’s worth mentioning tokens. You might have heard claims like “GPT-3 only accepts about 4,000 tokens.” Tokenization cuts the input data into meaningful parts (or tokens). When dealing with images, tokenization involves splitting the image into patches. When dealing with language, tokenization involves splitting text into frequently occurring words or pieces of words. If you’re confused between tokens and embeddings, here is a comparison to help:
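One way to see the difference is a small Python sketch: tokens are the pieces the input is cut into, and embeddings are the number vectors assigned to those pieces. The whitespace tokenizer and the random vectors below are simplifying assumptions; real language models split text into subword pieces and learn their embeddings during training.

```python
import random

def tokenize(text):
    """Toy tokenizer: lowercase and split on whitespace."""
    return text.lower().split()

def patches(image, size):
    """Split a 2-D grid (list of rows) into non-overlapping size x size patches."""
    return [
        [row[c:c + size] for row in image[r:r + size]]
        for r in range(0, len(image), size)
        for c in range(0, len(image[0]), size)
    ]

# Text: tokens first, then one id per distinct token, then one vector per id.
tokens = tokenize("The cat sat on the mat")
vocab = {tok: i for i, tok in enumerate(dict.fromkeys(tokens))}
rng = random.Random(0)
# Random 4-number vectors stand in for embeddings a real model would learn.
embedding_table = [[rng.uniform(-1, 1) for _ in range(4)] for _ in vocab]

token_ids = [vocab[tok] for tok in tokens]
embedded = [embedding_table[i] for i in token_ids]

# Images: a 4x4 grid of made-up pixel values cut into 2x2 patches.
grid = [[r * 4 + c for c in range(4)] for r in range(4)]
image_tokens = patches(grid, 2)

print(tokens)
print(token_ids)
print(len(image_tokens))
```

Note that both occurrences of "the" map to the same id and therefore to the same vector: the token is the piece of text, the embedding is the numbers that stand for it.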

To wrap up the discussion on models, let's consider how an iPhone can identify the species of plants/animals in your photos. It’s a pretty cool feature if you haven’t used it before:

I used to think that Apple was comparing my image to millions of other images of plants and animals and identifying the closest match, a huge computational feat 😵‍💫. That is not what is happening. Apple has already trained a model that is good at identifying species, using powerful computers. After the training is complete, they are left with a complicated equation (a model) that accepts photos as inputs. This model is stored either locally on my phone or in the cloud, depending on whether the species-identification feature needs to work offline. When I use this feature, my image gets fed into this model. The photo gets tokenized, and each token gets embedded and turned into a vector. Then, a bunch of linear algebra operations are applied using the parameters chosen during training, finally returning the species. 🌱

In summary, parameters can be thought of as numbers that encode what the model learned from being exposed to the training data. A model’s size is the number of parameters it has, and it determines how much space the model takes up. As a rough mental picture, output = model(input) = embedded(input) * parameters. The example model y=mx has 1 parameter, but GPT-3 has 175 billion parameters 🤯
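To get a feel for how parameter count translates into storage, here is a back-of-the-envelope sketch in Python. It assumes each parameter is stored as a 32-bit (4-byte) or 16-bit (2-byte) number and counts only the parameters themselves; `model_size_gb` is a hypothetical helper, and real model files can differ.

```python
def model_size_gb(num_parameters, bytes_per_parameter):
    """Approximate storage for the parameters alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9

# y = m*x has a single parameter; 175 billion is the GPT-3 figure quoted in this post.
print(model_size_gb(1, 4))                # one 32-bit number
print(model_size_gb(175_000_000_000, 4))  # 32-bit numbers
print(model_size_gb(175_000_000_000, 2))  # 16-bit numbers
```

Under these assumptions, 175 billion parameters come to roughly 700 GB at 4 bytes each, or 350 GB at 2 bytes each.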

Section III: AI for Language — LMs, LLMs, NLP, Neural Networks

Having addressed key concepts used across all forms of machine learning, we can delve into a niche of AI that is particularly hot these days: natural language processing (NLP). NLP focuses on giving computers the ability to understand written and spoken language in the same way human beings can. In short, it is AI x Linguistics.

A specific type of model in NLP is the language model, a notable example being GPT-3. Language models are built to predict the next word given part of a sentence. When a computer predicts the next word accurately again and again, it ends up generating meaningful content (e.g., blog posts written by ChatGPT). The language model that most of us interact with on a day-to-day basis is the autocomplete on our smartphone keyboards.
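Next-word prediction can be sketched with a very simple technique: count which word most often follows each word in some text, then predict that follower. This is a bigram counting model, far simpler than GPT-3, and the corpus and function names below are made up for illustration.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus; real language models train on vastly more text.
corpus = "i like ice cream . i like pizza . i like ice cream a lot . you like ice skating ."

def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, start, length):
    """Repeatedly predict the next word to produce a short sequence."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

model = train_bigram(corpus)
print(predict_next(model, "ice"))
print(generate(model, "i", 3))
```

Predicting one word at a time and feeding each prediction back in is the same loop, in miniature, that lets a language model produce whole sentences.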

It follows from what we already know that large language models, or LLMs, are just models that predict the next word and have a lot of parameters (on the scale of hundreds of millions to hundreds of billions). Some of the most famous LLMs are GPT, BERT, and T5. Here is how they compare in size:

Thus far we have treated models as black boxes — you input some data and, boom, you get the output. Looking inside this black box, one model structure frequently used in language models is the neural network. Neural networks are named as such because they’re structured to loosely mimic how neurons in our brains work.

The neural network diagram can seem intimidating, but it is just a sequence of layers. The first layer accepts the input, the final layer is the output, and the layers in between are called hidden layers. The input data enters the first layer and gets passed through the nodes in each layer, getting processed along the way, until it reaches the final layer. The model’s weights/parameters, which are chosen during the training process, are represented by the connections between the nodes.

Looking at how the data gets passed between nodes in each layer, every node in the neural network has a value, called its activation, which in this simplified picture lies between 0 and 1. The activation is computed from a weighted sum: each incoming node’s activation from the previous layer is multiplied by the weight on its connection, and the results are added up. If the result is below a threshold value, the node passes no data to the next layer. If it exceeds the threshold value, the node “fires,” which means it passes the processed data along all its outgoing connections.
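The layer-by-layer flow can be sketched in Python as follows. Two assumptions are worth flagging: this sketch uses a sigmoid function, which smoothly squashes the weighted sum into the range 0 to 1 instead of applying a hard threshold, and it adds a per-node bias term, which the description above leaves out. The weights and biases are invented; in a real network, training chooses them.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Compute one layer's activations.

    weights[j][i] is the weight on the connection from input node i to node j.
    """
    return [
        sigmoid(sum(w * a for w, a in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

# Hypothetical parameters for a network with 2 inputs, 2 hidden nodes, 1 output.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

inputs = [1.0, 1.0]
hidden = layer_forward(inputs, hidden_weights, hidden_biases)
output = layer_forward(hidden, output_weights, output_biases)
print(hidden, output)
```

Each call to `layer_forward` is one step of data moving from one layer to the next; stacking more calls gives a deeper network.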

Conclusion

And that’s a wrap! I hope this post was helpful. If you found this interesting, I have two articles coming up very soon that simplify how language models are trained and how the transformer architecture works. Subscribe if you are interested in having them sent to your inbox.

If you have any feedback or corrections, send them my way. If you have any questions, leave a comment.

And if you think anyone else would appreciate this post, kindly share it with them.

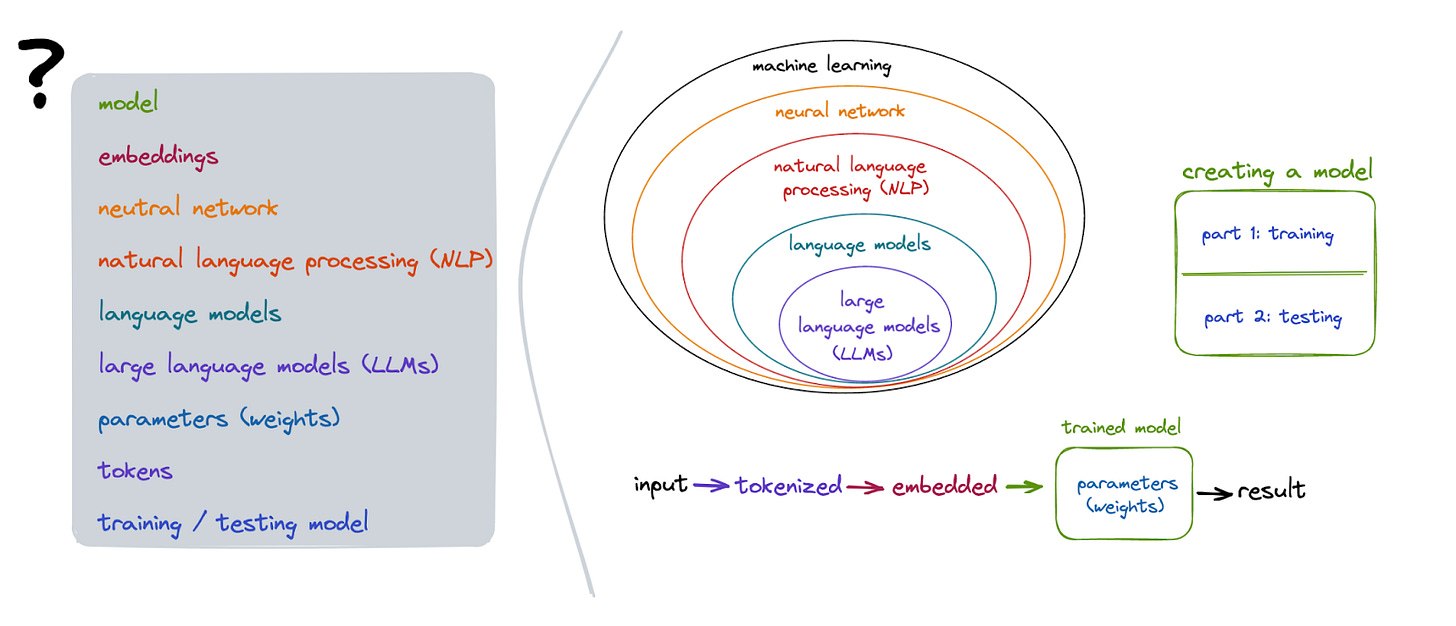

Here is how all the terms discussed in this post visually relate to each other:

Special thanks to Ishaan Jaffer for his candid thoughts while I was drafting this.
